Chapter 8 Advanced raster analysis

8.1 Learning objectives

By the end of this practical you should be able to:

- Explain and execute appropriate pre-processing steps of raster data

- Replicate published methodologies using raster data

- Design new R code to undertake further analysis

8.2 Homework

Outside of our scheduled sessions you should be doing around 12 hours of extra study per week. Feel free to follow your own GIS interests, but good places to start include the following:

Exam

Each week we will provide a short task to test your knowledge, these should be used to guide your study for the final exam.

For the task this week have a go at the practice exam questions, you should be able to answer any of the practice questions:

The actual exam will be distributed using the same format, so it’s vital to do this.

Reading

This week:

Appendix “Raster operations in R” from Intro to GIS and Spatial Analysis by Gimond (2019)

Raster manipulation from Spatial data science by Hijmans (2016). This last one is another tutorial — it seems there aren’t any decent free raster textbook chapters, let me know if you find one.

Remember this is just a starting point, explore the reading list, practical and lecture for more ideas.

8.3 Recommended listening 🎧

Some of these practicals are long, take regular breaks and have a listen to some of our fav tunes each week.

Andy Hamilton.

Adam Straight outa somewhere near Grantham - this week it has to be the Sleaford Mods!

8.4 Introduction

Within this practical we are going to be using data from the Landsat satellite series provided for free by the United States Geological Survey (USGS) to replicate published methods. Landsat imagery is the longest free temporal image repository of consistent medium resolution data. It collects data at each point on Earth each every 16 days (temporal resolution) in a raster grid composed of 30 by 30 m cells (spatial resolution). Geographical analysis and concepts are becoming ever more entwined with remote sensing and Earth observation. Back when I was an undergraduate there was specific software for undertaking certain analysis, now you can basically use any GIS. I see remote sensing as the science behind collecting the data with spatial data analysis then taking that data and providing meaning. So whilst i’ll give a background to Landsat data and remote sensing, this practical will focus on more advanced methods for analysing and extracting meaning from raster data.

8.5 .gitignore

If you are using Git with your project and have large data it’s best to set up a .gitignore file. This file tells git to ignore certain files so they aren’t in your local repository that is then pushed to GitHub. If you try and push large files to GitHub you will get an error and could run into lots of problems, trust me, i’ve been there. Look at my .gitignore for this repository and you will notice:

prac8_data/Lsatdata/*

prac8_data/exampleGoogleDrivedata/*

prac8_data/Manchester_boundary/*

This means igore all files within these folders, all is denoted by the *. The .gitignore has to be in your main project folder, if you have made a a git repo for your project then you should have a .gitignore so all you have to do is open it and add the folder or file paths you wish to ignore.

GitHub has a maximum file upload size of 50mb….

](prac8_images/gitignore.jpg)

Figure 8.1: GitHub maximum file upload of 50mb. Source: reddit r/ProgrammerHumor

8.5.1 Remote sensing background (required)

- Landsat is raster data

- It has pixels of 30 by 30m collected every 16 days with global coverage

- As humans we see in the visible part of the electromagnetic spectrum (red-green-blue) Landsat takes samples in these bands and several more to make a spectral signature for each pixel (see image below)

- Each band is provided as a separate

.TIFFraster layer

](prac8_images/specsig.jpg)

Figure 8.2: Spectral signatures of different landcover types. Source: Introduction to Categorisation of Objects from their Data, Science Education through Earth Observation for High Schools (SEOS)

Later on we will compute temperature from the Landsat data, whilst i refer to and explain each varaiable created don’t get overwhelmed by it, take away the process of loading and manipulating raster data in R to extract meaningful information. An optional remote sensing background containing additional information can be found at the end of this practical should you wish to explore this further.

8.6 Data

8.6.1 Shapefile

The shapefile of Manchester is available from the data folder for this week on GitHub. To download this consult How to download data and files from GitHub, i’d used Option 1.

8.6.2 Raster data (Landsat)

To access the Landsat data we will use in this practical you can either:

- Sign up for a free account at: https://earthexplorer.usgs.gov/.

- Use the Landsat data provided on Moodle — this will be available only if the earth explorer website is down (e.g. in the case of US government shutdowns)

To download the data:

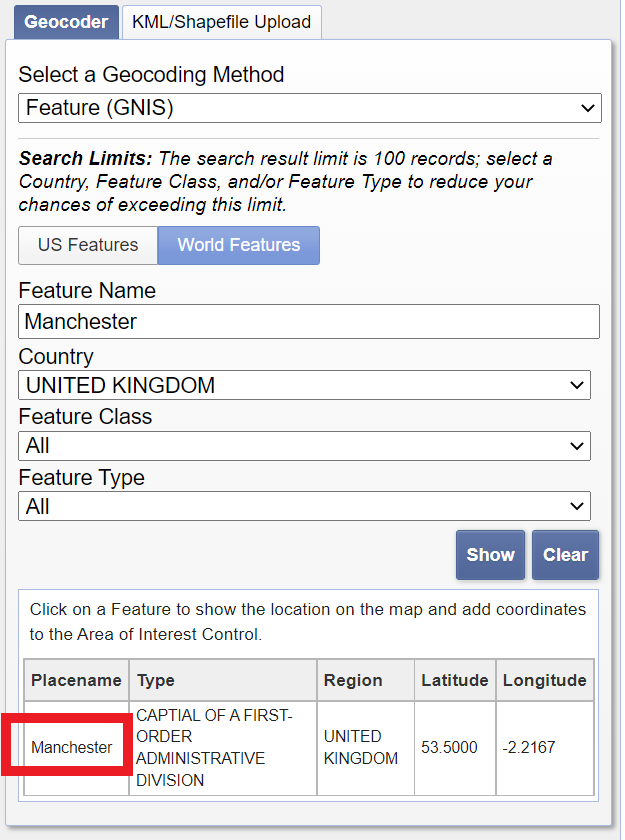

Enter Manchester in the address/place box > select the country as United Kingdom > click Show and then click on the word Manchester in the box that appears.

Select the date range between the 12/5/2019 and 14/5/2019 — it’s a US website so check the dates are correct.

Click dataset and select Landsat, then Landsat Collection 1 Level-1, check Landsat 8 (level 2 is surface reflectance — see Remote sensing background (optional)

Click results, there should be one image (GEOTiff), download it..it might take a while

Landsat data comes zipped twice as a

.tar.gz. Use 7Zip or another file extractor, extract it once to get to a.tarthen extract again and files should appear. Or the code below will also let you extract Landsat data…

8.6.2.1 Alternative raster data

Occasionally the earth explorer website can go down for maintenance or during government shutdowns. If possible I strongly advise you to learn how to use its interface as multiple other data providers have similar interfaces. GitHub also place a strict size limit on files of 100MB. However, in order to account for situations like this I’ve placed the zipped file on GoogleDrive and will demonstrate how to access this from R using the new googledrive package.

This could be a great option for you to gain reproducibility points if you have large files that you can’t upload to GitHub.

In GoogleDrive you need to ensure your file is shareable with others — right click on it > Share > then copy the link. I have done this for my file in the example below, but if you try and replicate this, make sure you’ve done it otherwise it might not work when other people try and run your code, as they won’t have access to the file on your GoogleDrive.

Depending on your internet speed this example might take some time…

Be sure to change the path to your practical 7 folder but make sure you include the filename within it and set overwrite to T (or TRUE) if you are going to run this again.

library("googledrive")

o<-drive_download("https://drive.google.com/open?id=1MV7ym_LW3Pz3MxHrk-qErN1c_nR0NWXy",

path="prac8_data/exampleGoogleDrivedata/LC08_L1TP_203023_20190513_20190521_01_T1.tar.gz",

overwrite=T)Next we need to uncompress and unzip the file with untar(), first list the files that end in the extension .gz then pass that to untar with the pipe %>% remember this basically means after this function… then…do this other function with that data

library(tidyverse)

library(fs)

library(stringr)

library(utils)

listfiles<-dir_info(here::here("prac8_data", "exampleGoogleDrivedata")) %>%

dplyr::filter(str_detect(path, ".gz")) %>%

dplyr::select(path)%>%

dplyr::pull()%>%

#print out the .gz file

print()%>%

as.character()%>%

utils::untar(exdir=here::here("prac8_data", "exampleGoogleDrivedata"))8.7 Processing raster data

8.7.1 Loading

Today, we are going to be using a Landsat 8 raster of Manchester. The vector shape file for Manchester has been taken from an ESRI repository.

- Let’s load the majority of packages we will need here.

## listing all possible libraries that all presenters may need following each practical

library(sp)

library(raster)

library(rgeos)

library(rgdal)

library(rasterVis)

library(ggplot2)

library(terra)

library(sf)- Now let’s list all our Landsat bands except band 8 (i’ll explain why next) along with our study area shapefile. Each band is a separate

.TIFfile.

library(stringr)

library(raster)

library(fs)

library(sf)

library(tidyverse)

# List your raster files excluding band 8 using the patter argument

listlandsat<-dir_info(here::here("prac8_data", "Lsatdata"))%>%

dplyr::filter(str_detect(path, "[B123456790].TIF")) %>%

dplyr::select(path)%>%

pull()%>%

as.character()%>%

# Load our raster layers into a stack

raster::stack()

# Load the manchester boundary

manchester_boundary <- st_read(here::here("prac8_data",

"Manchester_boundary",

"manchester_boundary.shp"))## Reading layer `manchester_boundary' from data source

## `C:\Users\Andy\OneDrive - University College London\Teaching\CASA0005\2022_2023\CASA0005repo\prac8_data\Manchester_boundary\manchester_boundary.shp'

## using driver `ESRI Shapefile'

## Simple feature collection with 1 feature and 5 fields

## Geometry type: POLYGON

## Dimension: XY

## Bounding box: xmin: 540926.6 ymin: 5917117 xmax: 558663.4 ymax: 5933117

## Projected CRS: WGS 84 / UTM zone 30N#check they have the same Coordinate Reference System (CRS)

crs(manchester_boundary)## Coordinate Reference System:

## Deprecated Proj.4 representation:

## +proj=utm +zone=30 +datum=WGS84 +units=m +no_defs

## WKT2 2019 representation:

## PROJCRS["WGS 84 / UTM zone 30N",

## BASEGEOGCRS["WGS 84",

## DATUM["World Geodetic System 1984",

## ELLIPSOID["WGS 84",6378137,298.257223563,

## LENGTHUNIT["metre",1]]],

## PRIMEM["Greenwich",0,

## ANGLEUNIT["degree",0.0174532925199433]],

## ID["EPSG",4326]],

## CONVERSION["UTM zone 30N",

## METHOD["Transverse Mercator",

## ID["EPSG",9807]],

## PARAMETER["Latitude of natural origin",0,

## ANGLEUNIT["Degree",0.0174532925199433],

## ID["EPSG",8801]],

## PARAMETER["Longitude of natural origin",-3,

## ANGLEUNIT["Degree",0.0174532925199433],

## ID["EPSG",8802]],

## PARAMETER["Scale factor at natural origin",0.9996,

## SCALEUNIT["unity",1],

## ID["EPSG",8805]],

## PARAMETER["False easting",500000,

## LENGTHUNIT["metre",1],

## ID["EPSG",8806]],

## PARAMETER["False northing",0,

## LENGTHUNIT["metre",1],

## ID["EPSG",8807]]],

## CS[Cartesian,2],

## AXIS["(E)",east,

## ORDER[1],

## LENGTHUNIT["metre",1]],

## AXIS["(N)",north,

## ORDER[2],

## LENGTHUNIT["metre",1]],

## ID["EPSG",32630]]crs(listlandsat)## Coordinate Reference System:

## Deprecated Proj.4 representation:

## +proj=utm +zone=30 +datum=WGS84 +units=m +no_defs

## WKT2 2019 representation:

## PROJCRS["WGS 84 / UTM zone 30N",

## BASEGEOGCRS["WGS 84",

## DATUM["World Geodetic System 1984",

## ELLIPSOID["WGS 84",6378137,298.257223563,

## LENGTHUNIT["metre",1]]],

## PRIMEM["Greenwich",0,

## ANGLEUNIT["degree",0.0174532925199433]],

## ID["EPSG",4326]],

## CONVERSION["UTM zone 30N",

## METHOD["Transverse Mercator",

## ID["EPSG",9807]],

## PARAMETER["Latitude of natural origin",0,

## ANGLEUNIT["degree",0.0174532925199433],

## ID["EPSG",8801]],

## PARAMETER["Longitude of natural origin",-3,

## ANGLEUNIT["degree",0.0174532925199433],

## ID["EPSG",8802]],

## PARAMETER["Scale factor at natural origin",0.9996,

## SCALEUNIT["unity",1],

## ID["EPSG",8805]],

## PARAMETER["False easting",500000,

## LENGTHUNIT["metre",1],

## ID["EPSG",8806]],

## PARAMETER["False northing",0,

## LENGTHUNIT["metre",1],

## ID["EPSG",8807]]],

## CS[Cartesian,2],

## AXIS["(E)",east,

## ORDER[1],

## LENGTHUNIT["metre",1]],

## AXIS["(N)",north,

## ORDER[2],

## LENGTHUNIT["metre",1]],

## USAGE[

## SCOPE["Engineering survey, topographic mapping."],

## AREA["Between 6°W and 0°W, northern hemisphere between equator and 84°N, onshore and offshore. Algeria. Burkina Faso. Côte' Ivoire (Ivory Coast). Faroe Islands - offshore. France. Ghana. Gibraltar. Ireland - offshore Irish Sea. Mali. Mauritania. Morocco. Spain. United Kingdom (UK)."],

## BBOX[0,-6,84,0]],

## ID["EPSG",32630]]8.7.2 Resampling

- There is an error with this dataset as band 8 does not fully align with the extent of the other raster layers. There are several ways to fix this, but in this tutorial we will resample the band 8 layer with the extent of band 1. First, read in the band 8 and store it as a raster.

# get band 8

b8list<-dir_info(here::here("prac8_data", "Lsatdata"))%>%

dplyr::filter(str_detect(path, "[B8].TIF")) %>%

dplyr::select(path)%>%

pull()%>%

as.character()%>%

raster()Then, resample() and write out the new layer, resampling takes awhile, so please be patient or find my output on GitHub.

## ngb is a nearest neighbour sampling method

b8correct <- b8list%>%

resample(., listlandsat$LC08_L1TP_203023_20190513_20190521_01_T1_B1,

method = "ngb") %>%

# Write out the raster

writeRaster(.,str_c(here::here("prac8_data",

"Lsatdata"),

names(b8list),

sep="/"),

format='GTiff',

overwrite=TRUE)- Load band 8 and add it to our raster stack

b8backin<-dir_info(here::here("prac8_data", "Lsatdata"))%>%

dplyr::filter(path == "C:/Users/Andy/OneDrive - University College London/Teaching/CASA0005/2022_2023/CASA0005repo/prac8_data/Lsatdata/LC08_L1TP_203023_20190513_20190521_01_T1_B8.tif") %>%

dplyr::select(path)%>%

pull()%>%

as.character()%>%

raster()

listlandsat <- listlandsat %>%

addLayer(., b8backin)- We can compare it to see if both rasters have the same extent, number of rows and columns, projection, resolution and origin

raster::compareRaster(listlandsat$LC08_L1TP_203023_20190513_20190521_01_T1_B1,

listlandsat$LC08_L1TP_203023_20190513_20190521_01_T1_B8)## [1] TRUE8.7.3 Clipping

- Our raster is currently the size of the scene which satellite data is distributed in, to clip it to our study area it’s best to first crop it to the extent of the shapefile and then mask it as we have done in previous practicals…

lsatmask <- listlandsat %>%

# now crop our temp data to the extent

raster::crop(.,manchester_boundary)%>%

raster::mask(., manchester_boundary)- If all we wanted to do was clip our data, we could now change our filenames in the raster stack and write the

.TIFFfiles out again…

# add mask to the filenames within the raster stack

names(lsatmask) <- names(lsatmask)%>%

str_c(.,

"mask",

sep="_")

# I need to write mine out in another location

outputfilenames <-

str_c("prac8_data/Lsatdata/", "mask/", names(lsatmask) ,sep="")In the first line of code i’m taking the original names of the raster layers and adding “mask” to the end of them. This is done using str_c() from the stringr package and the arguments

names(lsatmask): original raster layer names"mask": what i want to add to the namessep="": how the names and “mask” should be seperated — “” means no spaces

As i can’t upload my Landsat files to GitHub i’m storing them in a folder that is not linked (remember this is all sotred on GitHub) – so you won’t find prac8_data/Lsatdata on there. If you want to store your clipped Landsat files in your project directory just use:

lsatmask %>%

writeRaster(., names(lsatmask),

bylayer=TRUE,

format='raster',

overwrite=TRUE)For me though it’s:

lsatmask %>%

raster::writeRaster(., outputfilenames,

bylayer=TRUE,

format='raster',

overwrite=TRUE)Here i write out each raster layer individually though specifying bylayer=TRUE. For some reason using the format=GTiff causes an error so i have changed this to the native raster format from the raster pacakge - it doesn’t really matter as all GIS software can read all types.

8.8 Data exploration

8.8.1 More loading and manipulating

- For the next stage of analysis we are only interested in bands 1-7, we can either load them back in from the files we just saved or take them directly from the original raster stack.

# either read them back in from the saved file:

manc_files<-dir_info(here::here("prac8_data", "Lsatdata", "mask")) %>%

dplyr::filter(str_detect(path, "[B1234567]_mask.grd")) %>%

dplyr::filter(str_detect(path, "B11", negate=TRUE))%>%

dplyr::select(path)%>%

pull()%>%

stack()

# or extract them from the original stack

manc<-stack(lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B1_mask,

lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B2_mask,

lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B3_mask,

lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B4_mask,

lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B5_mask,

lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B6_mask,

lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B7_mask)

# Name the Bands based on where they sample the electromagentic spectrum

names(manc) <- c('ultra-blue', 'blue', 'green', 'red', 'NIR', 'SWIR1', 'SWIR2') - If you want to extract specific information from a raster stack use:

crs(manc) # projection

extent(manc) # extent

ncell(manc) # number of cells

dim(manc) # number of rows, columns, layers

nlayers(manc) # number of layers

res(manc) # xres, yres8.8.2 Plotting data

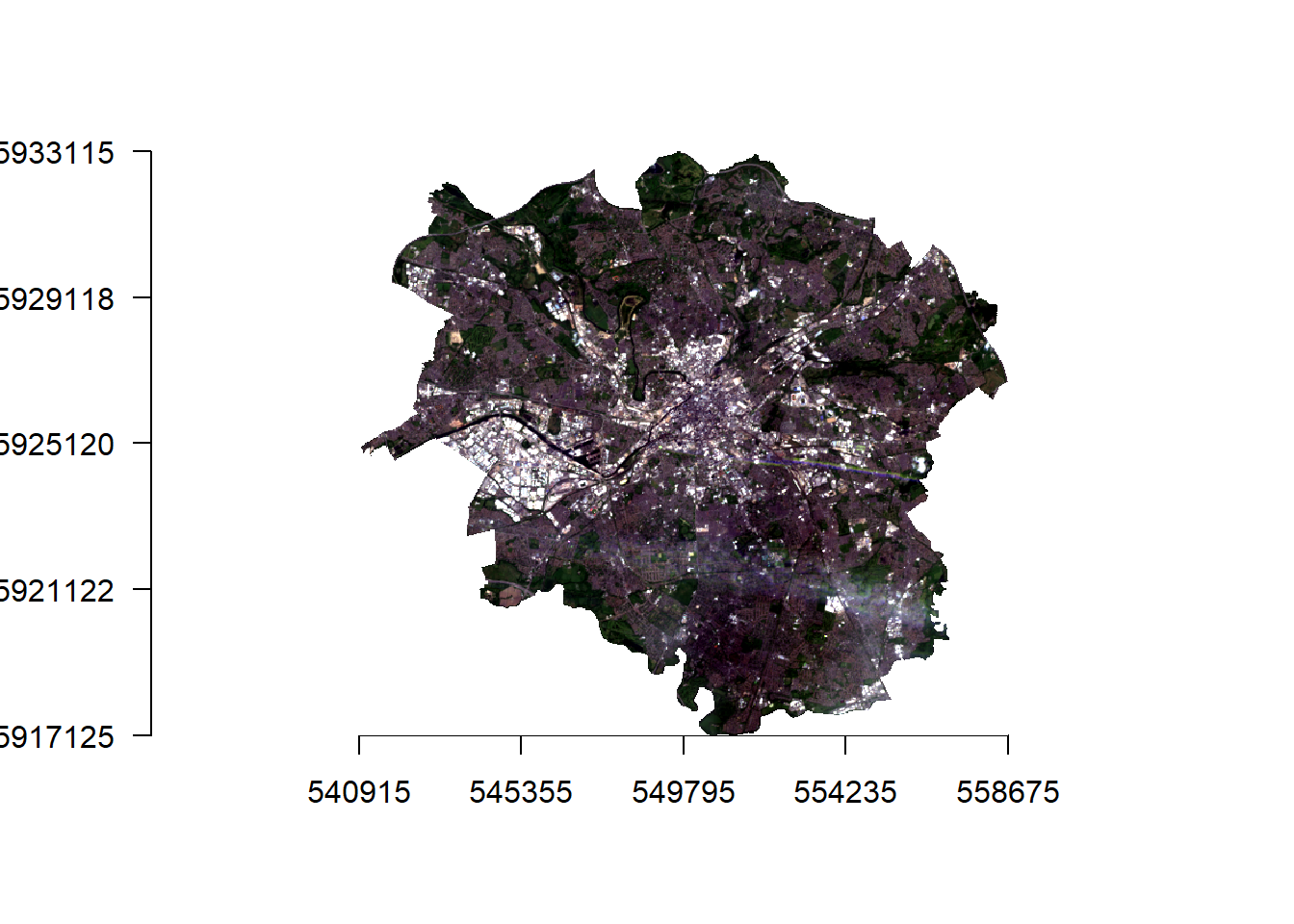

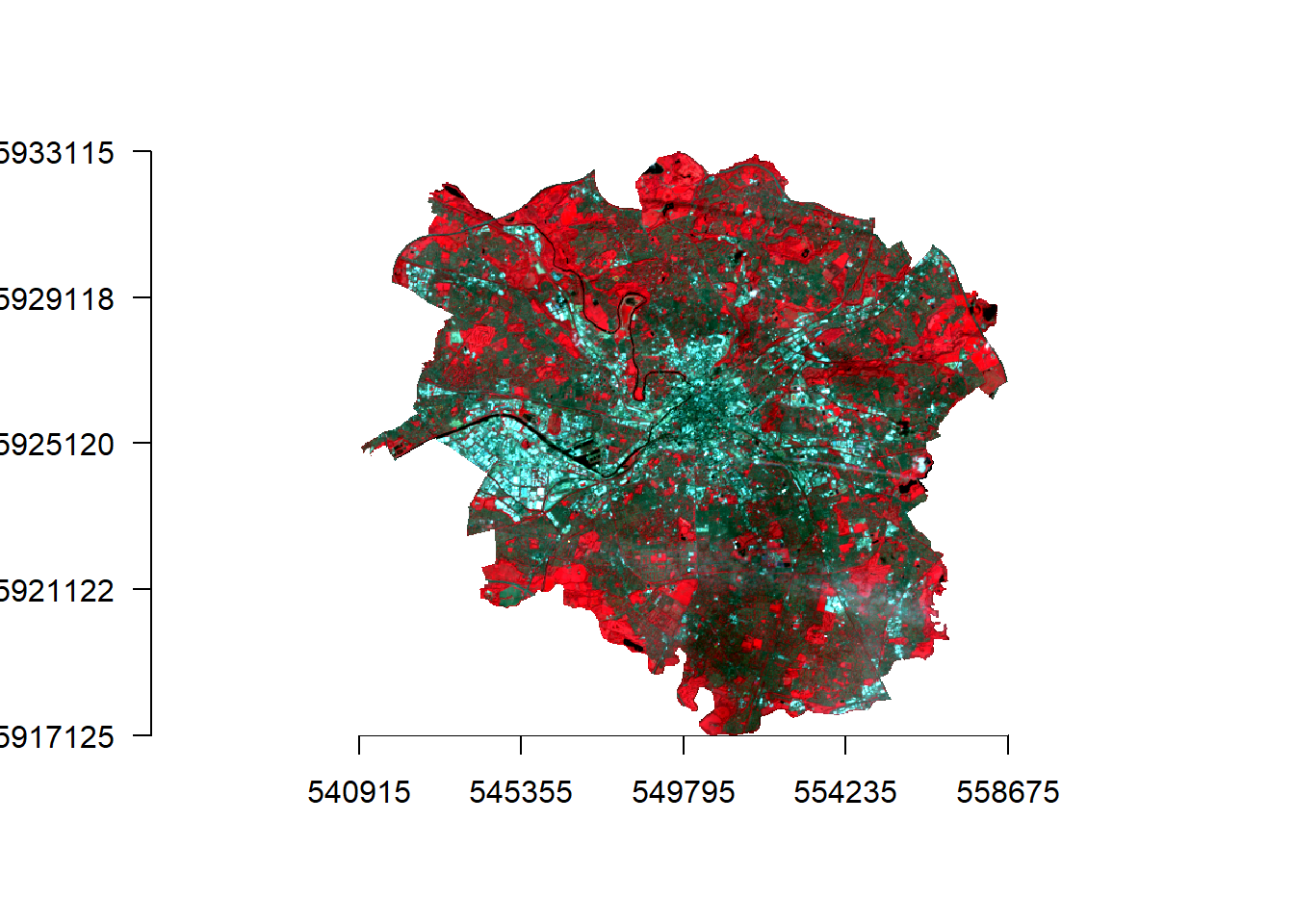

- Let’s actually have a look at our raster data, first in true colour (how humans see the world) and then false colour composites (using any other bands but not the combination of red, green and blue).

# true colour composite

manc_rgb <- stack(manc$red, manc$green, manc$blue)

# false colour composite

manc_false <- stack(manc$NIR, manc$red, manc$green)

manc_rgb %>%

plotRGB(.,axes=TRUE, stretch="lin")

manc_false %>%

plotRGB(.,axes=TRUE, stretch="lin")

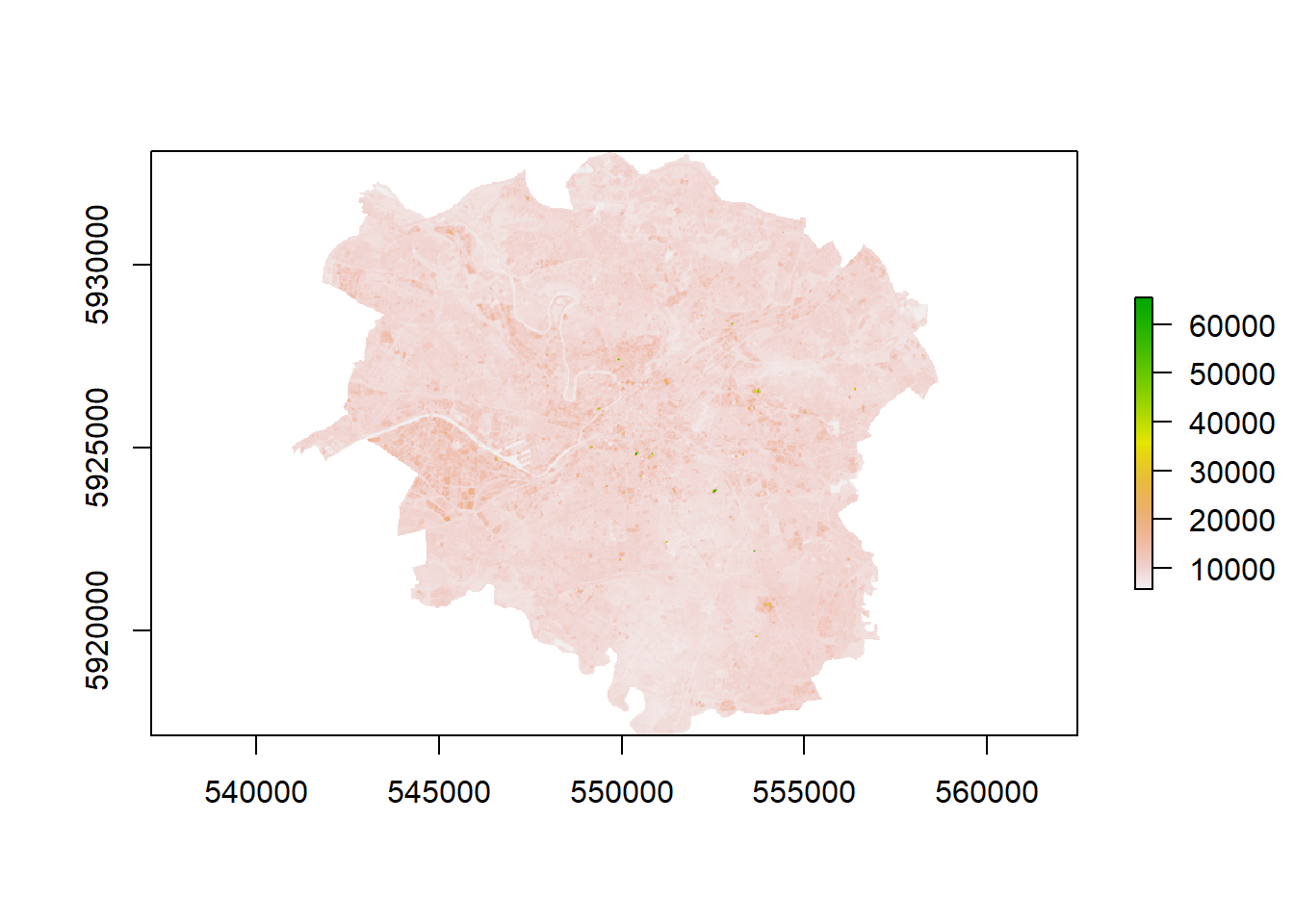

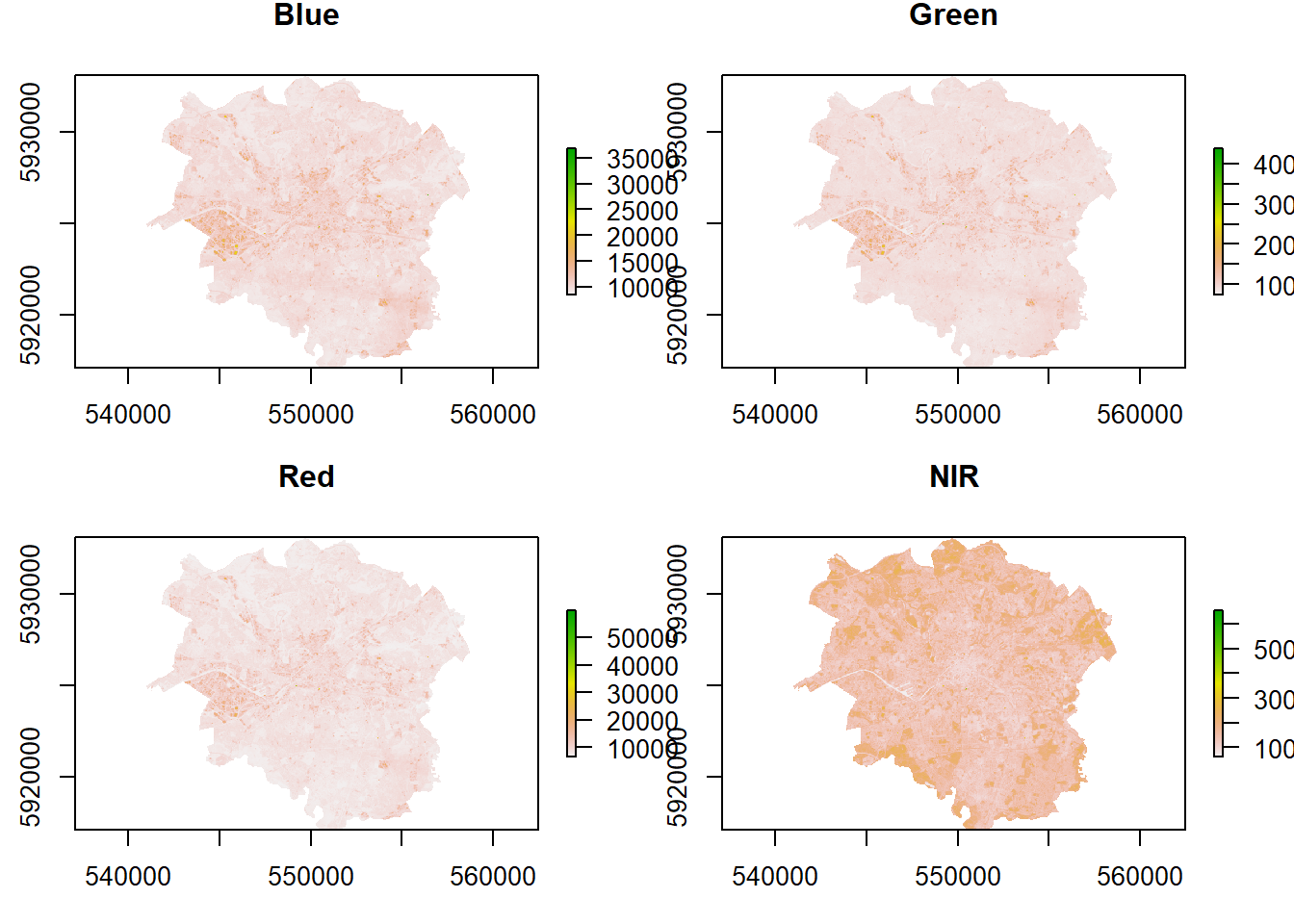

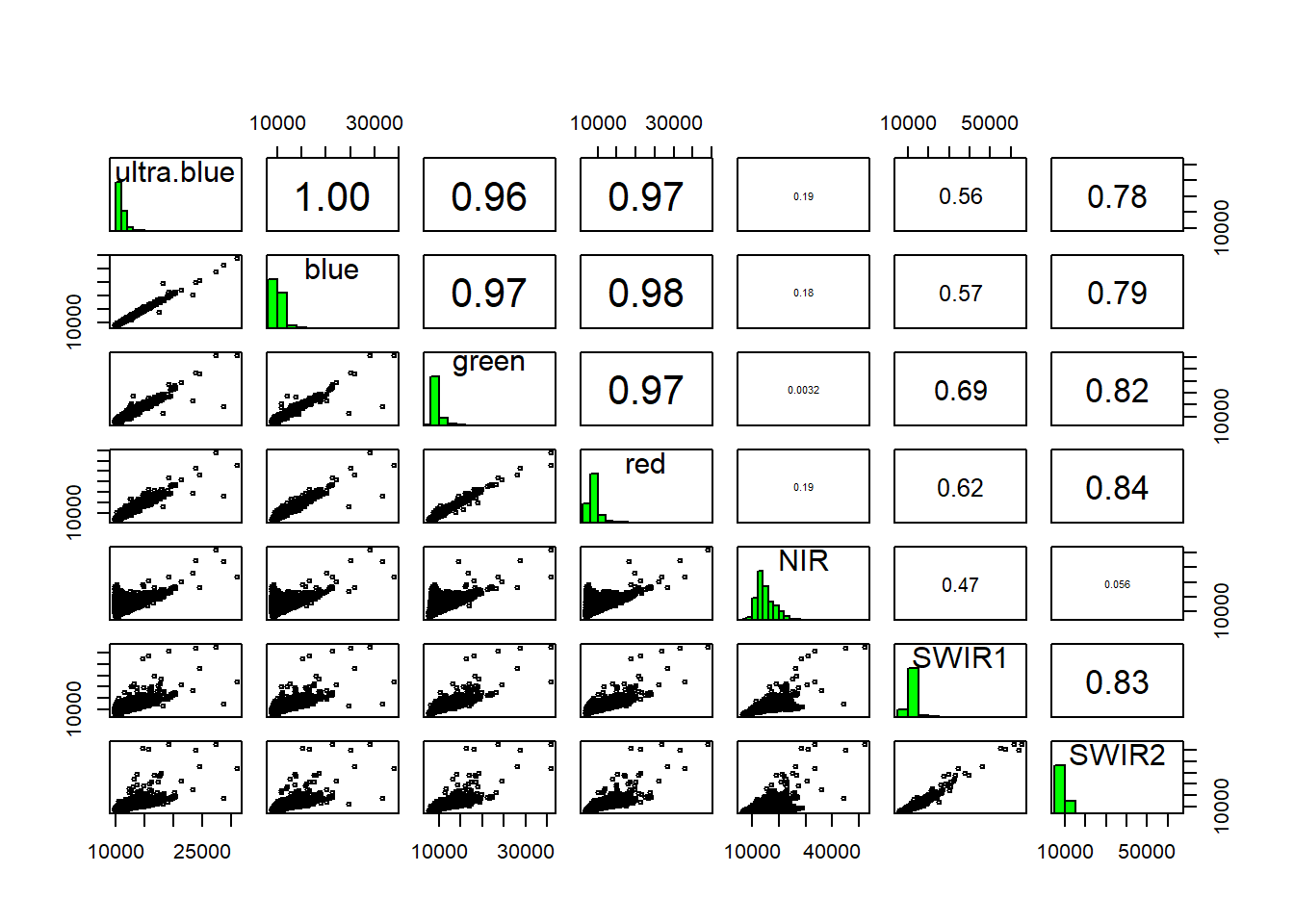

8.8.3 Data similarity

- What if you wanted to look at signle bands and also check the similarity between bands?

# Looking at single bands

plot(manc$SWIR2)

## How are these bands different?

#set the plot window size (2 by 2)

par(mfrow = c(2,2))

#plot the bands

plot(manc$blue, main = "Blue")

plot(manc$green, main = "Green")

plot(manc$red, main = "Red")

plot(manc$NIR, main = "NIR")

## Look at the stats of these bands

pairs(manc[[1:7]])## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

## Warning in graphics::par(usr): argument 1 does not name a graphical parameter

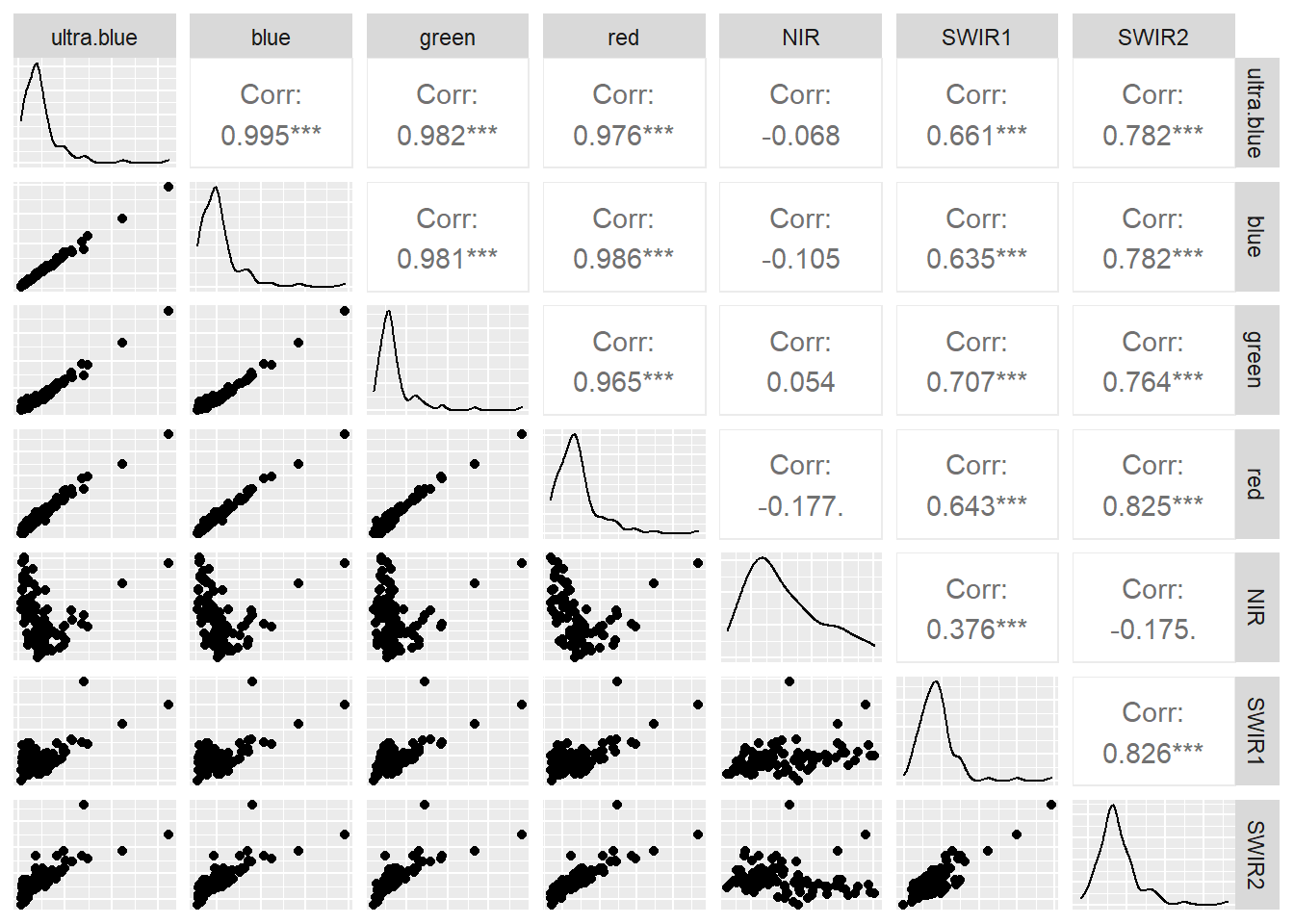

Low statistical significance means that the bands are sufficiently different enough in their wavelength reflectance to show different things in the image. We can also make this look a bit nicer with ggplot2 and GGally

library(ggplot2)

library(GGally)

manc %>%

terra::as.data.frame(., na.rm=TRUE)%>%

dplyr::sample_n(., 100)%>%

ggpairs(.,axisLabels="none")

You can do much more using GGally have a look at the great documentation

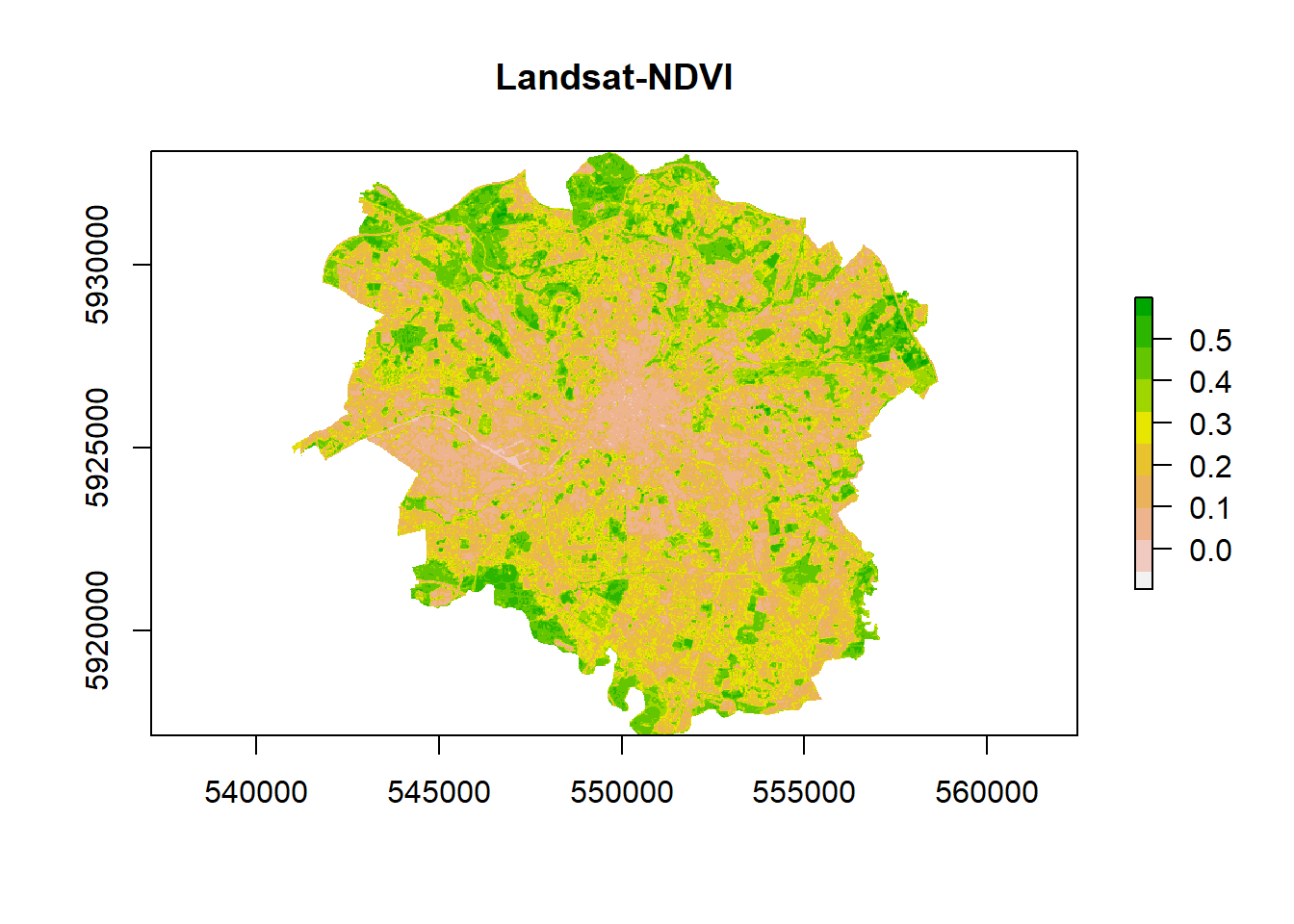

8.9 Basic raster calculations

Now we will move on to some basic advanced raster analysis to compute temperature from this raster data. To do so we need to generate additional raster layers, the first of which is NDVI

8.9.1 NDVI

Live green vegetation can be represented with the NIR and Red Bands through the normalised difference vegetation index (NDVI) as chlorophyll reflects in the NIR wavelength, but absorbs in the Red wavelength.

\[NDVI= \frac{NIR-Red}{NIR+Red}\]

8.9.2 NDVI function

One of the great strengths of R is that is lets users define their own functions. Here we will practice writing a couple of basic functions to process some of the data we have been working with.

One of the benefits of a function is that it generalises some set of operations that can then be repeated over and again on different data… the structure of a function in R is given below:

myfunction <- function(arg1, arg2, ... ){

statements

return(object)

}We can use NDVI as an example…

- Let’s make a function called

NDVIfun

NDVIfun <- function(NIR, Red) {

NDVI <- (NIR - Red) / (NIR + Red)

return(NDVI)

}Here we have said our function needs two arguments NIR and Red, the next line calculates NDVI based on the formula and returns it. To be able to use this function throughout our analysis either copy it into the console or make a new R script, save it in your project then call it within this code using the source() function e.g…

source('insert file name')- To use the function do so through…

ndvi <- NDVIfun(manc$NIR, manc$red)Here we call the function NDVIfun() and then provide the NIR and Red band.

- Check the output

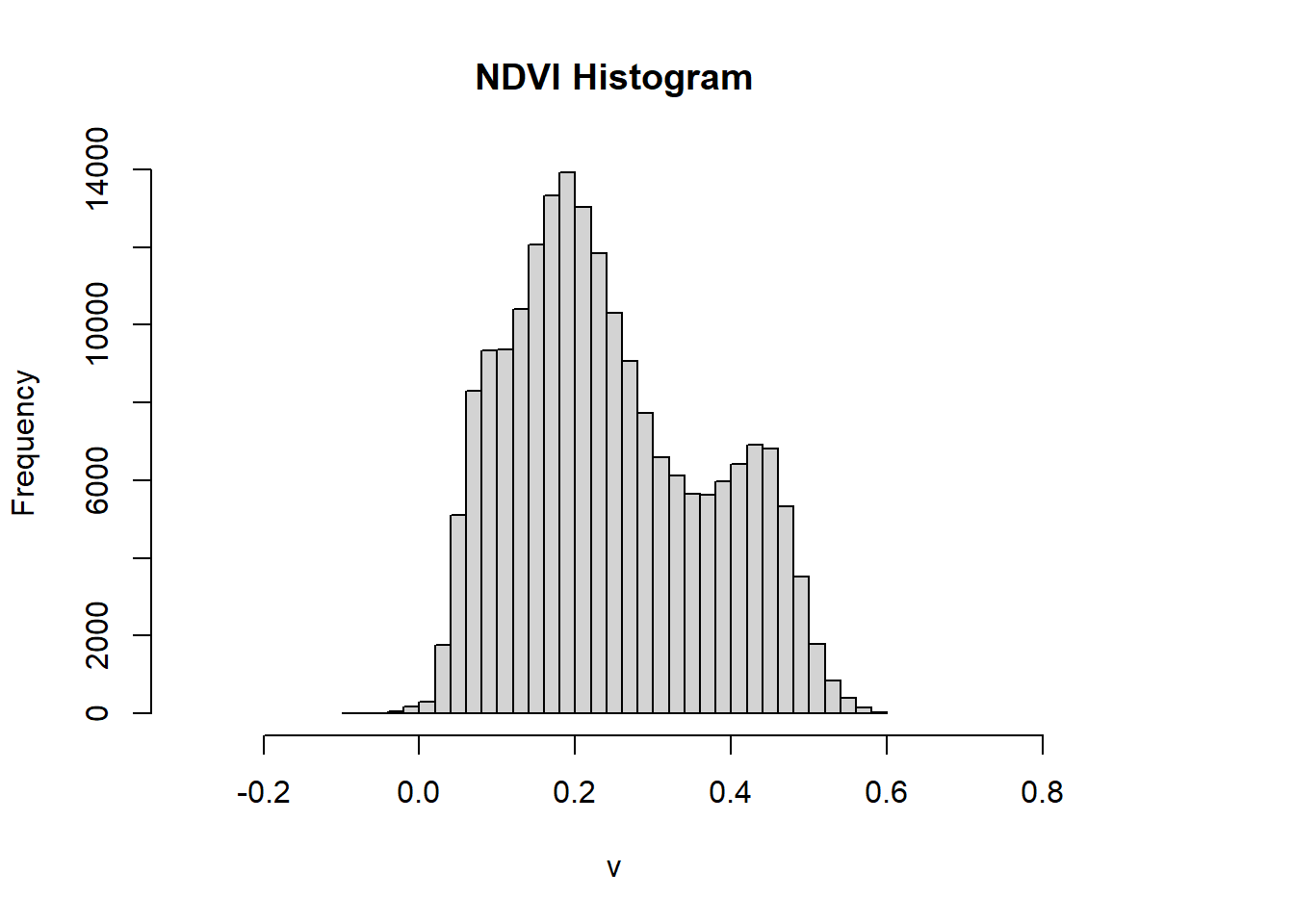

ndvi %>%

plot(.,col = rev(terrain.colors(10)), main = "Landsat-NDVI")

# Let's look at the histogram for this dataset

ndvi %>%

hist(., breaks = 40, main = "NDVI Histogram", xlim = c(-.3,.8))

- We can reclassify to the raster to show use what is most likely going to vegetation based on the histogram using the 3rd quartile — anything above the 3rd quartile we assume is vegetation.

Note, this is an assumption for demonstration purposes, if you were to do something similar in future analysis be sure to provide reasoning with linkage to literature (e.g. policy or academic)

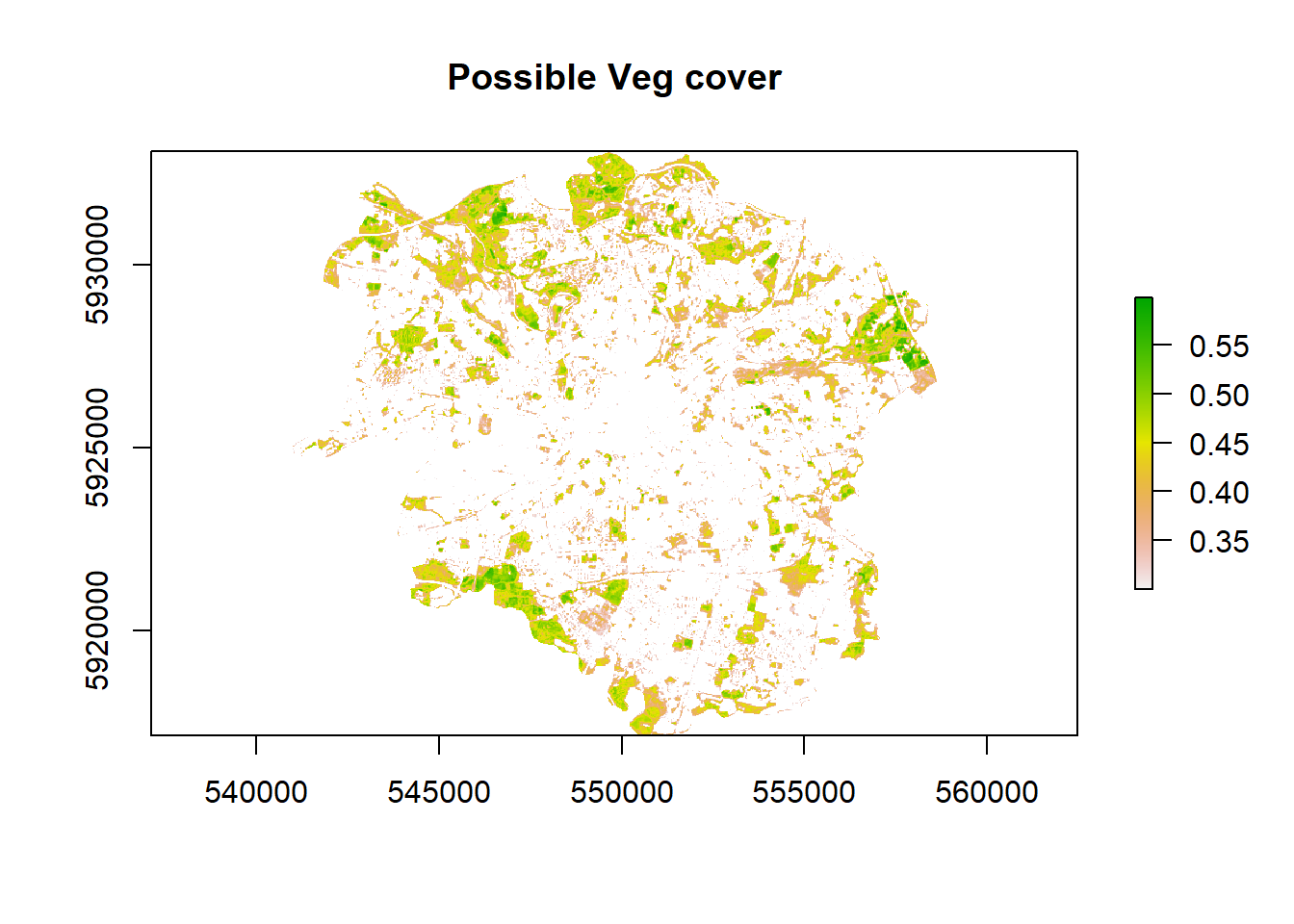

veg <- ndvi %>%

reclassify(., cbind(-Inf, 0.3, NA))

veg %>%

plot(.,main = 'Possible Veg cover')

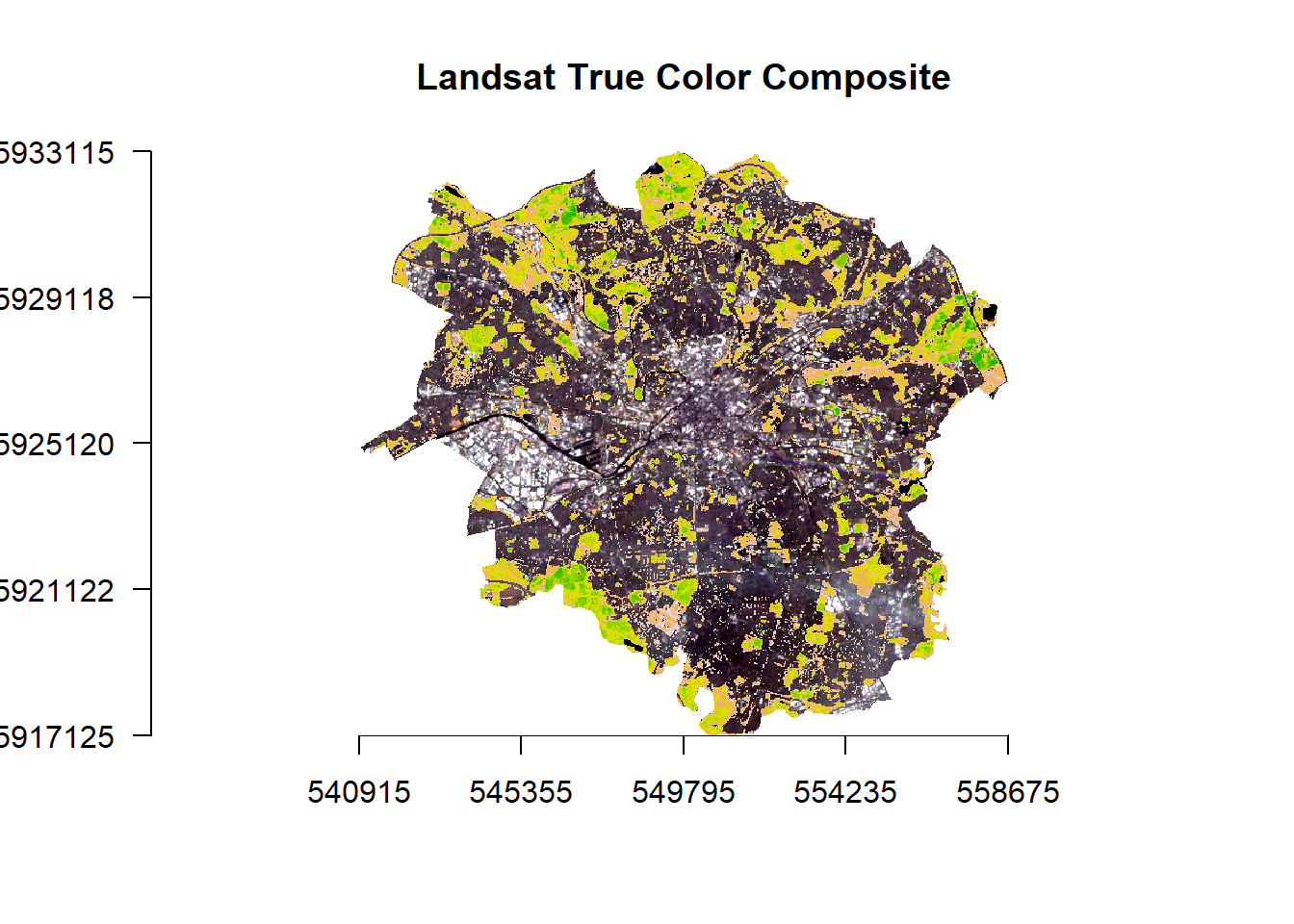

- Let’s look at this in relation to Manchester as a whole

manc_rgb %>%

plotRGB(.,axes = TRUE, stretch = "lin", main = "Landsat True Color Composite")

veg %>%

plot(., add=TRUE, legend=FALSE)

8.10 Advanced raster calculations

The goal of this final section is to set up a mini investigation to see if there is a relationship between urban area and temperature. If our hypothesis is that there is a relationship then our null is that there is not a relationship…

8.10.1 Calculating tempearture from Landsat data

Here we are going to compute temperature from Landsat data — there are many methods that can be found within literature to do so but we will use the one originally developed by Artis & Carnahan (1982), recently summarised by Guha et al. 2018 and and Avdan and Jovanovska (2016).

Some of the terms used our outlined in the remote sensing background section at the end of the document, so check back there if you get confused.

- Calculate the Top of Atmosphere (TOA) spectral radiance from the Digital Number (DN) using:

\[\lambda= Grescale * QCAL + Brescale\]

TOA spectral radiance is light reflected off the Earth as seen from the satellite measure in radiance units.

In this equation Grescale and Brescale represent the gain and bias of the image, with QCAL the Digital Number (DN) — how the raw Landsat image is captured. To go from DN to spectral radiance we use the calibration curve, created before the launch of the sensor. Bias is the spectral radiance of the sensor for a DN of 0, Gain is the gradient of the slope for other values of DN. See page 84 in RADIOMETRIC CORRECTION OF SATELLITE IMAGES: WHEN AND WHY RADIOMETRIC CORRECTION IS NECESSARY for further information.

Grescale and Brescale are available from the .MTL file provided when you downloaded the Landsat data. Either open this file in notepad and extract the required values for band 10 gain (MULT_BAND) and bias (ADD_BAND)

…Or we can automate it using the MTL() function within the RStoolbox package

library(RStoolbox)

MTL<-dir_info(here::here("prac8_data", "Lsatdata")) %>%

dplyr::filter(str_detect(path, "MTL.txt")) %>%

dplyr::select(path)%>%

pull()%>%

readMeta()

#To see all the attributes

head(MTL)- Now let’s extract the values from the readMTL variable for Band 10…we can either use the function

getMeta()fromRStoolboxof just extract the values ourselves…

offsetandgain <-MTL %>%

getMeta("B10_dn", metaData = ., what = "CALRAD")

offsetandgain## offset gain

## B10_dn 0.1 0.0003342##OR

offsetandgain <- subset(MTL$CALRAD, rownames(MTL$CALRAD) == "B10_dn")- Run the calculation using the band 10 raster layer

TOA <- offsetandgain$gain *

lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B10_mask +

offsetandgain$offset- Next convert the TOA to Brightness Temperature \(T_b\) using the following equation:

\[T_b=\frac{K_2}{ln((K_1/\lambda)+1)}\]

Brightness temperature is the radiance travelling upward from the top of the atmosphere to the satellite in units of the temperature of an equivalent black body.

K1 (774.8853) and K2 (1321.0789) are pre launch calibration constants provided by USGS.

Check the handbook for these values

- Instead of hardcoding these values…yep, you guessed it… we can extract them from our

MTL

Calidata <- MTL$CALBT%>%

terra::as.data.frame()%>%

mutate(Band=rownames(.))%>%

filter(Band=="B10_dn")

# subset the columns

K1 <- Calidata %>%

dplyr::select(K1)%>%

pull()

K2 <- Calidata %>%

dplyr::select(K2)%>%

pull()

Brighttemp <- (K2 / log((K1 / TOA) + 1))Earlier we calculated NDVI, let’s use that to determine emissivity of each pixel.

- First we need to calculate the fractional vegetation of each pixel, through the equation:

\[F_v= \left( \frac{NDVI - NDVI_{min}}{NDVI_{max}-NDVI_{min}} \right)^2\]

facveg <- (ndvi-0.2/0.5-0.2)^2Fractional vegetation cover is the ratio of vertically projected area of vegetation to the total surface extent.

Here, \(NDVI_{min}\) is the minimum NDVI value (0.2) where pixels are considered bare earth and \(NDVI_{max}\) is the value at which pixels are considered healthy vegetation (0.5)

- Now compute the emissivity using:

\[\varepsilon = 0.004*F_v+0.986\]

emiss <- 0.004*facveg+0.986Emissivity is the ratio absorbed radiation energy to total incoming radiation energy compared to a blackbody (which would absorb everything), being a measure of absorptivity.

- Great, we’re nearly there… get our LST following the equation from Weng et al. 2004 (also summarised in Guja et al. (2018) and Avdan and Jovanovska (2016)):

\[LST= \frac{T_b}{1+(\lambda \varrho T_b / (p))ln\varepsilon}\]

Where:

\[p= h\frac{c}{\varrho}\]

Ok, don’t freak out….let’s start with calculating \(p\)

Here we have:

\(h\) which is Plank’s constant \(6.626 × 10^-34 Js\)

\(c\) which is the velocity of light in a vaccum \(2.998 × 10^8 m/sec\)

\(\varrho\) which is the Boltzmann constant of \(1.38 × 10^-23 J/K\)

Boltzmann <- 1.38*10e-23

Plank <- 6.626*10e-34

c <- 2.998*10e8

p <- Plank*(c/Boltzmann)Now for the rest of the equation….we have the values for:

\(\lambda\) which is the effective wavelength of our data (10.9 for Landsat 8 band 10)

\(\varepsilon\) emissivity

\(T_b\) Brightness Temperature

- Run the equation with our data

#define remaining varaibles

lambda <- 1.09e-5

#run the LST calculation

LST <- Brighttemp/(1 +(lambda*Brighttemp/p)*log(emiss))

# check the values

LST## class : RasterLayer

## dimensions : 533, 592, 315536 (nrow, ncol, ncell)

## resolution : 30, 30 (x, y)

## extent : 540915, 558675, 5917125, 5933115 (xmin, xmax, ymin, ymax)

## crs : +proj=utm +zone=30 +datum=WGS84 +units=m +no_defs

## source : memory

## names : layer

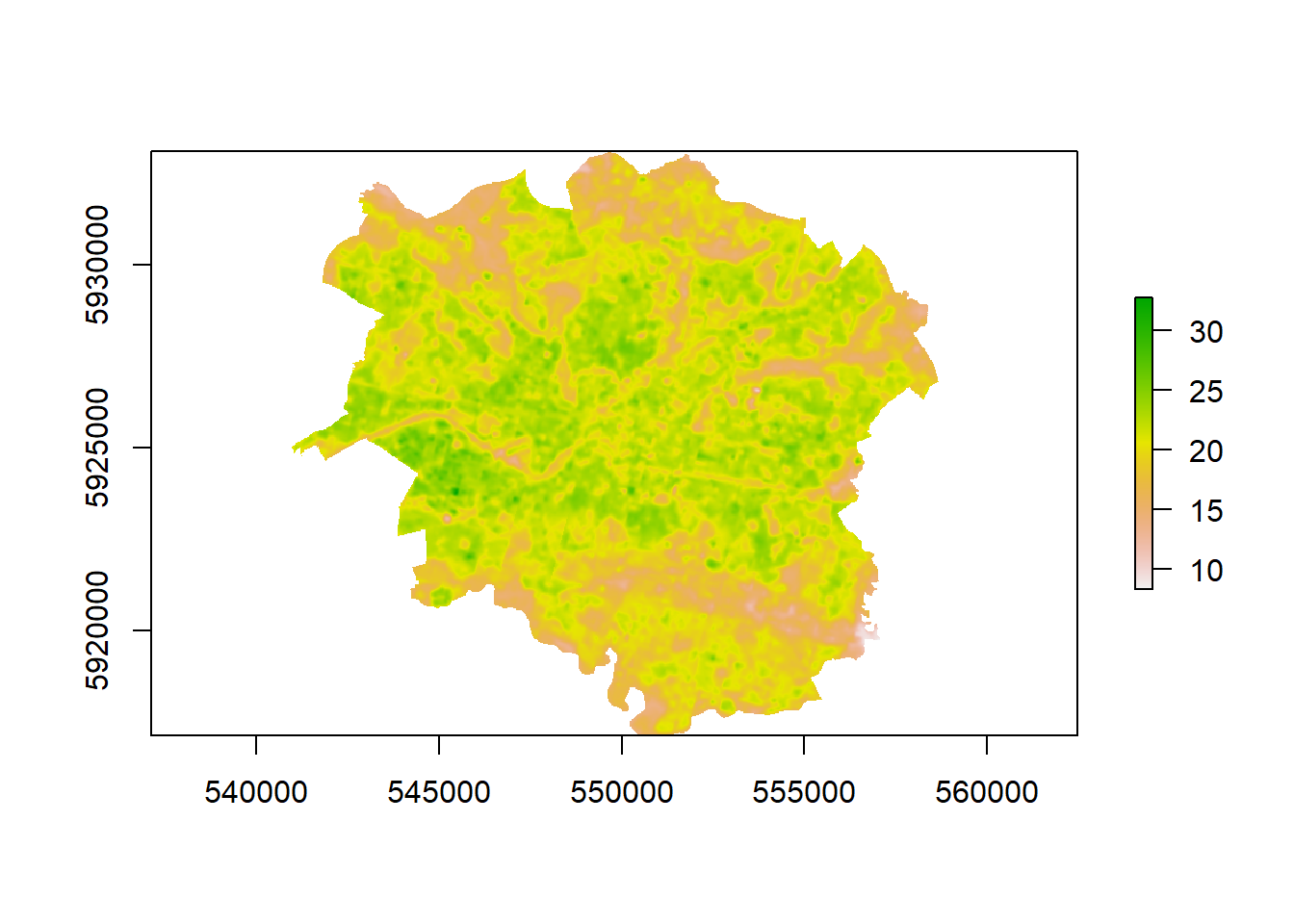

## values : 281.4855, 305.9571 (min, max)- Are the values very high?… That’s because we are in Kevlin not degrees Celcius…let’s fix that and plot the map

LST <- LST-273.15

plot(LST)

Nice that’s our temperature data sorted.

8.11 Calucating urban area from Landsat data

How about we extract some urban area using another index and then see how our temperature data is related?

We will use the Normalized Difference Built-up Index (NDBI) algorithm for identification of built up regions using the reflective bands: Red, Near-Infrared (NIR) and Mid-Infrared (MIR) originally proposed by Zha et al. (2003).

It is very similar to our earlier NDVI calculation but using different bands…

\[NDBI= \frac{Short-wave Infrared (SWIR)-Near Infrared (NIR)}{Short-wave Infrared (SWIR)+Near Infrared (NIR)}\]

In Landsat 8 data the SWIR is band 6 and the NIR band 5

- Let’s compute this index now…

NDBI=((lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B6_mask-

lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B5_mask)/

(lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B6_mask+

lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B5_mask))But do you remember our function? …Well this is the same calculation we used there just with different raster layers (or bands) so we could reuse it…

NDBIfunexample <- NDVIfun(lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B6_mask,

lsatmask$LC08_L1TP_203023_20190513_20190521_01_T1_B5_mask)8.12 Urban area and temperature relationship

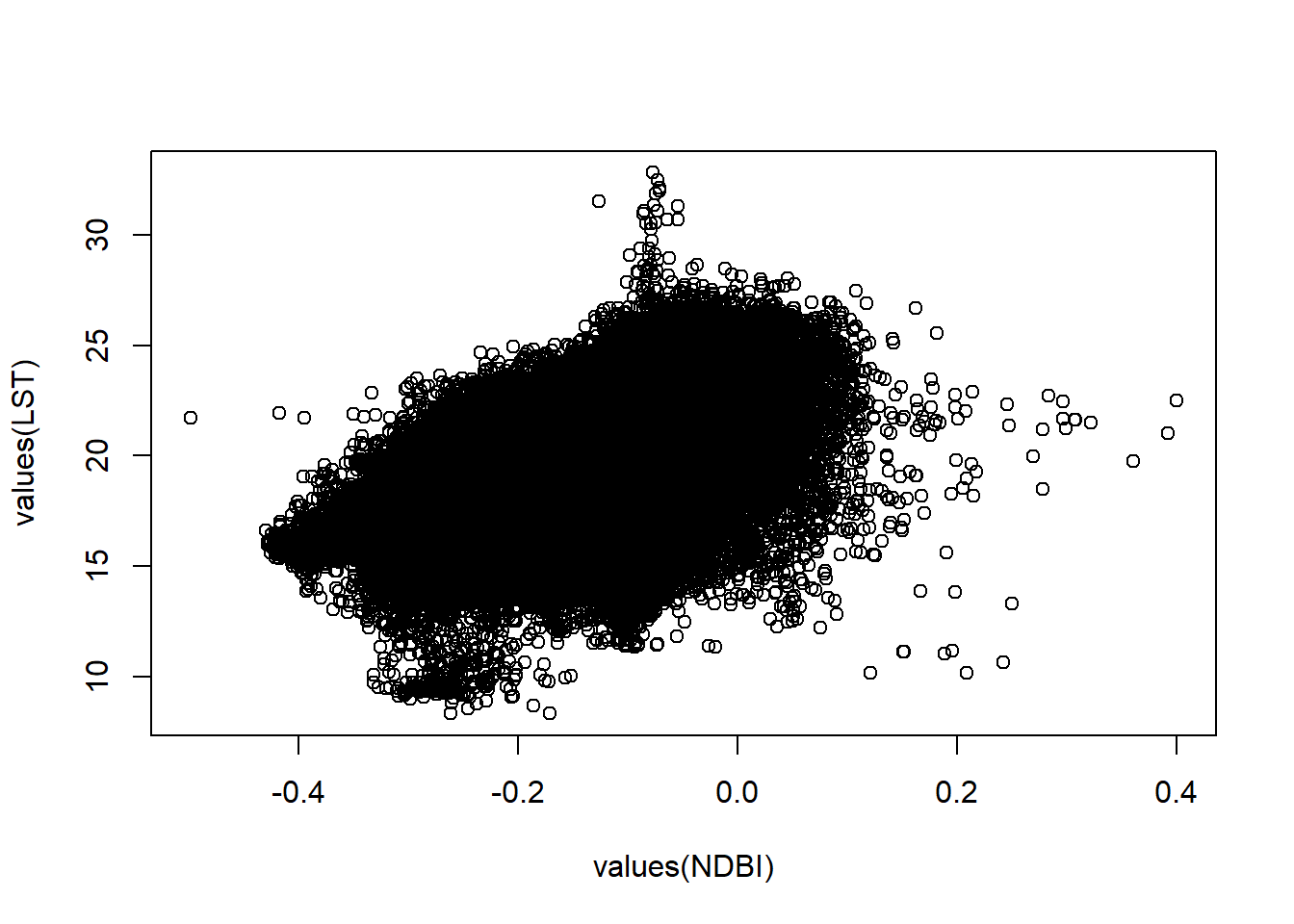

- We could plot the varaibles agaisnt each other but there are a lot of data points

plot(values(NDBI), values(LST))

This is termed the overplotting problem. So, let’s just take a random subset of the same pixels from both raster layers.

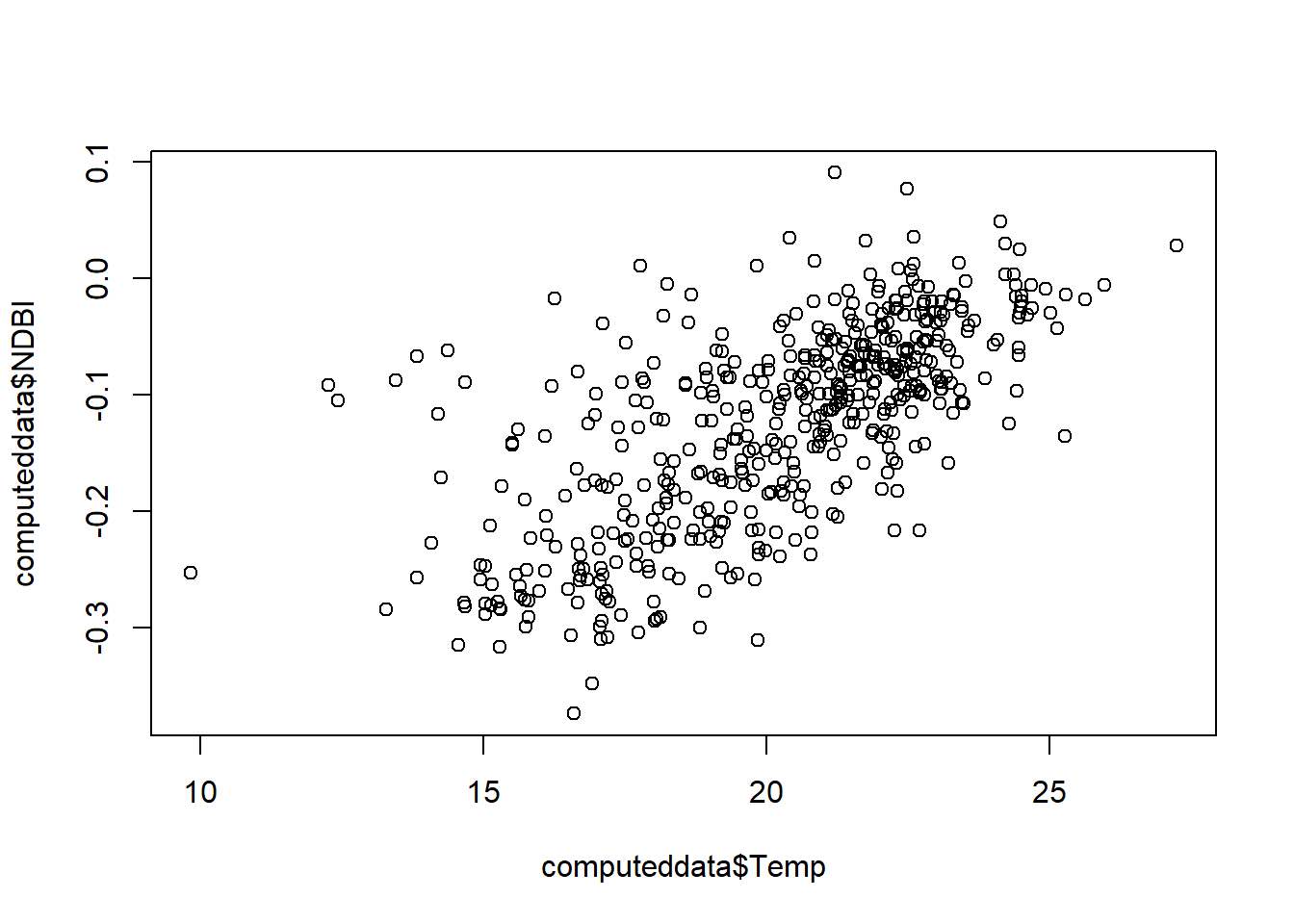

- To do so we need to again stack our layers

# stack the layers

computeddata <- LST%>%

stack(.,NDBI)%>%

terra::as.data.frame()%>%

na.omit()%>%

# take a random subset

dplyr::sample_n(., 500)%>%

dplyr::rename(Temp="layer.1", NDBI="layer.2")

# check the output

plot(computeddata$Temp, computeddata$NDBI)

- Let’s jazz things up, load some more packages

library(plotly)

library(htmlwidgets)- Transfrom the data to a data.frame to work with

ggplot, then plot

heat<-ggplot(computeddata, aes(x = NDBI, y = Temp))+

geom_point(alpha=2, colour = "#51A0D5")+

labs(x = "Temperature",

y = "Urban index",

title = "Manchester urban and temperature relationship")+

geom_smooth(method='lm', se=FALSE)+

theme_classic()+

theme(plot.title = element_text(hjust = 0.5))

# interactive plot

ggplotly(heat)## `geom_smooth()` using formula 'y ~ x'It’s a masterpiece!

](allisonhorst_images/ggplot2_masterpiece.png)

Figure 8.3: ggplot2 masterpiece. Source: Allison Horst data science and stats illustrations

- How about plotting the whole dataset rather than a random subset…

computeddatafull <- LST%>%

stack(.,NDBI)%>%

terra::as.data.frame()%>%

na.omit()%>%

# take a random subset

dplyr::rename(Temp="layer.1", NDBI="layer.2")

hexbins <- ggplot(computeddatafull,

aes(x=NDBI, y=Temp)) +

geom_hex(bins=100, na.rm=TRUE) +

labs(fill = "Count per bin")+

geom_smooth(method='lm', se=FALSE, size=0.6)+

theme_bw()

ggplotly(hexbins)8.13 Statistical summary

- To see if our variables are related let’s run some basic correlation

library(rstatix)

Correlation <- computeddatafull %>%

cor_test(Temp, NDBI, use = "complete.obs", method = c("pearson"))

Correlation## # A tibble: 1 × 8

## var1 var2 cor statistic p conf.low conf.high method

## <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <chr>

## 1 Temp NDBI 0.66 394. 0 0.660 0.665 PearsonLet’s walk through the results here…

p-value: tells us whether there is a statistically significant correlation between the datasets and if that we can reject the null hypothesis if p<0.05 (there is a 95% chance that the relationship is real).

cor: Product moment correlation coefficient

conf.low and con.high intervals: 95% confident that the population correlation coefficient is within this interval

statistic value (or t, or test statistic)

We can work out the critical t value using:

abs(qt(0.05/2, 198268))## [1] 1.959976Within this formula

0.05 is the confidence level (95%)

2 means a 2 sided test

198268 is the degrees of freedom (df), being the number of values we have -2

computeddatafull %>%

pull(Temp)%>%

length()## [1] 198270length(computeddatafull)## [1] 2Here, as our t values is > than the critical value we can say that there is a relationship between the datasets. However, we would normally report the p-value…

As p<0.05 is shows that are variables are have a statistically significant correlation… so as urban area (assuming the index in representative) per pixel increases so does temperature…therefore we can reject our null hypothesis… but remember that this does not imply causation!!

If you want more information on statistics in R go and read YaRrr! A Pirate’s Guide to R, chapter 13 on hypothesis tests.

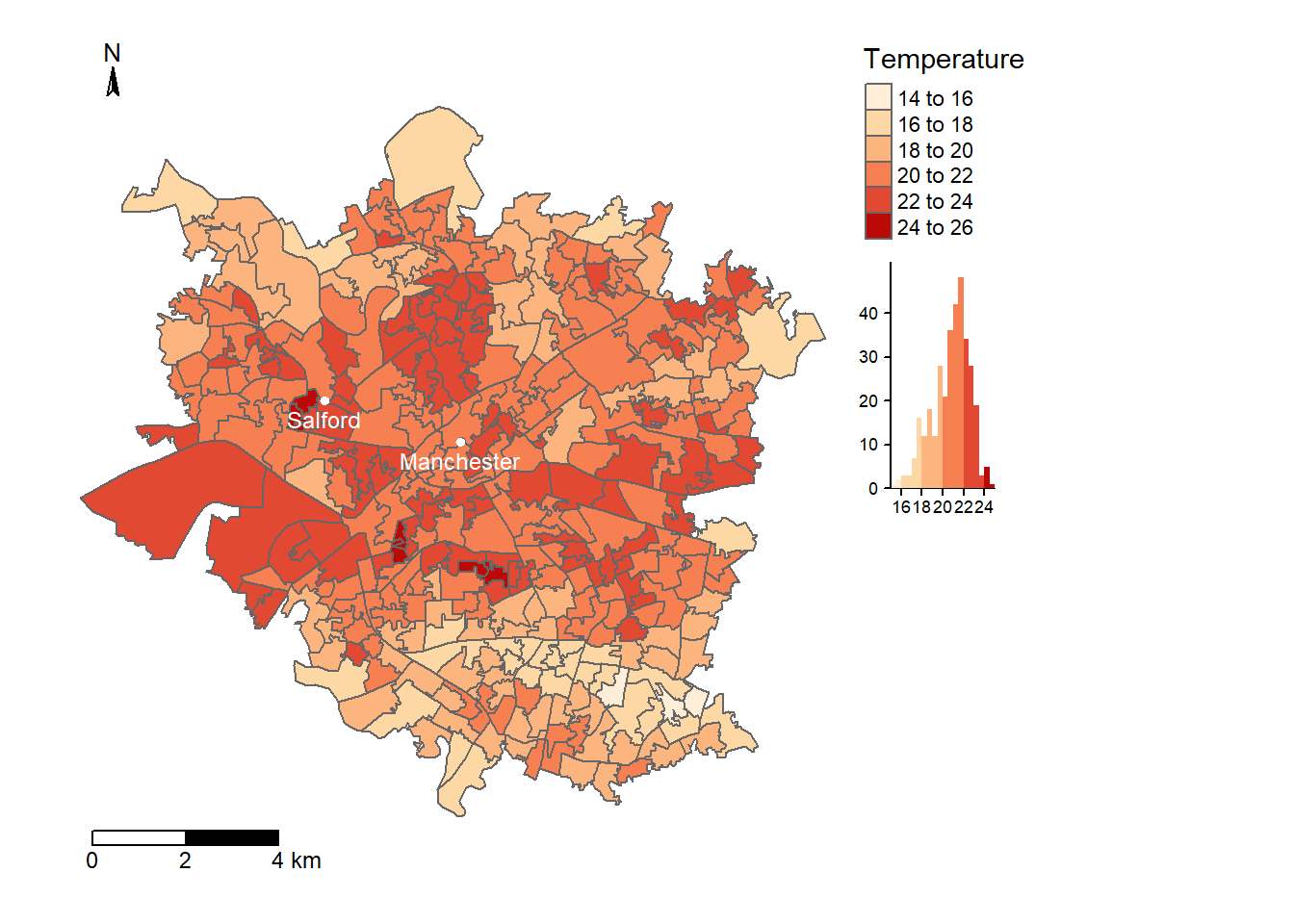

8.14 LSOA/MSOA stats

This is all useful, but what if we wanted to present this analysis to local leaders in Manchester to show which areas experience the highest temperature (remember, with the limitation of this being one day!)

Next, we will aggregate our raster data to LSOAs, taking a mean of the pixels in each LSOA.

LSOA data: https://data-communities.opendata.arcgis.com/datasets/5e1c399d787e48c0902e5fe4fc1ccfe3

Let’s prepare the new spatial data:

library(dplyr)

library(sf)

# read in LSOA data

UK_LSOA <- st_read(here::here("prac8_data",

"LSOA",

"Lower_Super_Output_Area_(LSOA)_IMD_2019__(OSGB1936).shp"))## Reading layer `Lower_Super_Output_Area_(LSOA)_IMD_2019__(OSGB1936)' from data source `C:\Users\Andy\OneDrive - University College London\Teaching\CASA0005\2022_2023\CASA0005repo\prac8_data\LSOA\Lower_Super_Output_Area_(LSOA)_IMD_2019__(OSGB1936).shp'

## using driver `ESRI Shapefile'

## Simple feature collection with 34753 features and 7 fields

## Geometry type: MULTIPOLYGON

## Dimension: XY

## Bounding box: xmin: -6.418524 ymin: 49.86474 xmax: 1.762942 ymax: 55.81107

## Geodetic CRS: WGS 84# project it to match Manchester boundary

UK_LSOA <- UK_LSOA %>%

st_transform(., 32630)

# read in MSOA and project it

MSOA <- st_read(here::here("prac8_data",

"MSOA",

"Middle_Layer_Super_Output_Areas_December_2011_Generalised_Clipped_Boundaries_in_England_and_Wales.shp")) %>%

st_transform(., 32630)## Reading layer `Middle_Layer_Super_Output_Areas_December_2011_Generalised_Clipped_Boundaries_in_England_and_Wales' from data source `C:\Users\Andy\OneDrive - University College London\Teaching\CASA0005\2022_2023\CASA0005repo\prac8_data\MSOA\Middle_Layer_Super_Output_Areas_December_2011_Generalised_Clipped_Boundaries_in_England_and_Wales.shp'

## using driver `ESRI Shapefile'

## Simple feature collection with 7201 features and 6 fields

## Geometry type: MULTIPOLYGON

## Dimension: XY

## Bounding box: xmin: 82675.02 ymin: 5343.575 xmax: 655606.3 ymax: 657533.7

## Projected CRS: OSGB 1936 / British National Grid#select only MSOA within boundary

manchester_MSOA <- MSOA[manchester_boundary, , op=st_within]

#select only LSOA that intersect MSOA

manchester_LSOA <- UK_LSOA[manchester_MSOA,]Next, we need to do the extraction with raster::extract(). fun() specifies how to summarise the pixels within the spatial unit (LSOA), na.rm()=TRUE ignores NA values and df=TRUE outputs the result to a dataframe.

# extract mean LST value per LSOA

LST_per_LSOA <- raster::extract(LST, manchester_LSOA, fun=mean, na.rm=TRUE, df=TRUE)

# add the LSOA ID back

LST_per_LSOA$FID<-manchester_LSOA$FID

# join the average temp to the sf

manchester_LSOA_temp <- manchester_LSOA %>%

left_join(.,

LST_per_LSOA,

by="FID")%>%

dplyr::rename(temp=layer)Now we have the temperature per LSOA, but what about the amount of land considered urban? Here, we will assume that any NDBI value above 0 means the whole pixel is considered urban. raster::extract() can also be used to get all the pixels within each spatial area (LSOA)..

#define urban as NDBI greater than 0

NDBI_urban<- NDBI > 0

# Sum the pixels that are grater than 0 per LSOA

NDBI_urban_per_LSOA <- raster::extract(NDBI_urban, manchester_LSOA, na.rm=TRUE, df=TRUE, fun=sum)

# list the pixels per LSOA

NDBI_per_LSOA_cells <- raster::extract(NDBI_urban, manchester_LSOA, na.rm=TRUE, df=TRUE, cellnumbers=TRUE)

#count the pixels per LSOA

NDBI_per_LSOA2_cells<- NDBI_per_LSOA_cells %>%

count(ID)

#add the LSOA ID to the urban area

NDBI_urban_per_LSOA$FID<-manchester_LSOA$FID

#add the LSOA ID to the number of cells

NDBI_per_LSOA2_cells$FID<-manchester_LSOA$FID

#join these two

Urban_info_LSOA <- NDBI_urban_per_LSOA %>%

left_join(.,

NDBI_per_LSOA2_cells,

by="FID")

# remove what you don't need and rename

Urban_info_LSOA_core_needed <- Urban_info_LSOA %>%

dplyr::rename(urban_count=layer,

LSOA_cells=n) %>%

dplyr::select(urban_count,

LSOA_cells,

FID)%>%

dplyr::mutate(percent_urban=urban_count/LSOA_cells*100)

# join the data

# one sf with temp and % urban per LSOA

manchester_LSOA_temp_urban <- manchester_LSOA_temp %>%

left_join(.,

Urban_info_LSOA_core_needed,

by="FID")8.15 Mapping

Now, we could map both temperature (and the % of urban area) within a LSOA individually….In our map we want to include some place names from Open Street Map, so let’s get those from OSM that is stored on Geofabrik

Places <- st_read(here::here("prac8_data",

"OSM",

"gis_osm_places_free_1.shp")) %>%

st_transform(., 32630)## Reading layer `gis_osm_places_free_1' from data source

## `C:\Users\Andy\OneDrive - University College London\Teaching\CASA0005\2022_2023\CASA0005repo\prac8_data\OSM\gis_osm_places_free_1.shp'

## using driver `ESRI Shapefile'

## Simple feature collection with 635 features and 5 fields

## Geometry type: POINT

## Dimension: XY

## Bounding box: xmin: -2.717277 ymin: 53.33978 xmax: -1.921855 ymax: 53.67854

## Geodetic CRS: WGS 84manchester_Places <- Places[manchester_boundary,]%>%

filter(fclass=="city")Let’s make a map, like we did earlier in the module using the tmap package, remember to add a caption in Rmarkdown include the argument fig.cap="caption here" in the code chunk header.

# this first bit makes the box bigger

# so we can have a north arrow not overlapping the data

# see: https://www.jla-data.net/eng/adjusting-bounding-box-of-a-tmap-map/

bbox_new <- st_bbox(manchester_LSOA_temp_urban) # current bounding box

yrange <- bbox_new$ymax - bbox_new$ymin # range of y values

bbox_new[4] <- bbox_new[4] + (0.1 * yrange) # ymax - top

bbox_new[2] <- bbox_new[2] - (0.1 * yrange) # ymin - bottom

# the plot starts here

library(tmap)

tmap_mode("plot")

# set the new bbox

# remove bbox=bbox_new to see the difference

tm1 <- tm_shape(manchester_LSOA_temp_urban, bbox = bbox_new) +

tm_polygons("temp",

palette="OrRd",

legend.hist=TRUE,

title="Temperature")+

tm_shape(manchester_Places, bbox=bbox_new)+

tm_dots(size=0.1, col="white")+

tm_text(text="name", size=0.75, ymod=-0.5, col="white", fontface = "bold")+

#tm_legend(show=FALSE)+

tm_layout(frame=FALSE,

legend.outside=TRUE)+

tm_compass(type = "arrow", size=1, position = c("left", "top")) +

tm_scale_bar(position= c("left", "bottom"), breaks=c(0,2,4), text.size = .75)

#tm_credits("(a)", position=c(0,0.85), size=1.5)

tm1

Figure 8.4: Average temperature per LSOA in Manchester, calcualted from Landsat imagery dated 13/5/19, following the methodology specified by Guha et al. 2018

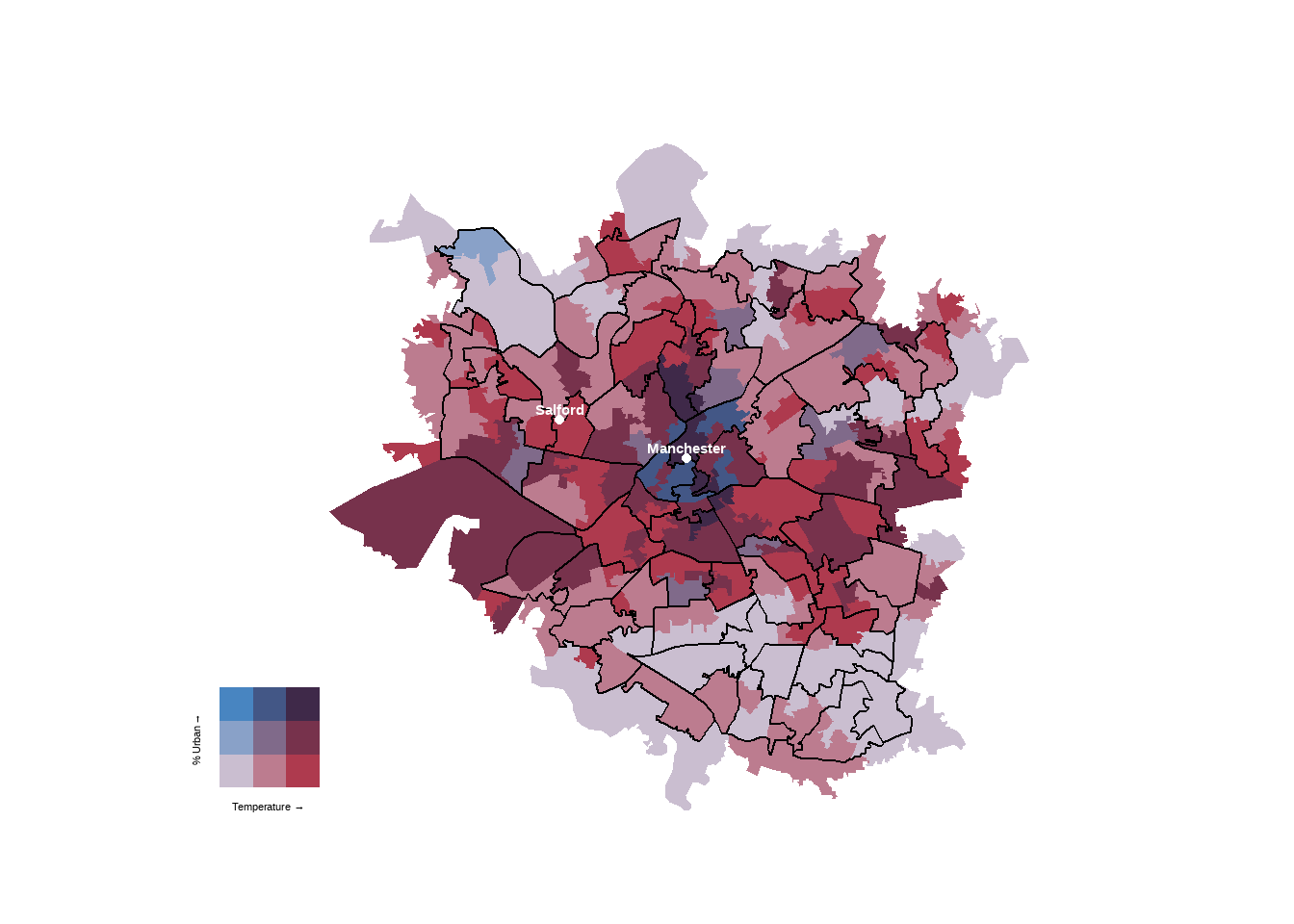

This could also be repeated for urban area and plotted in one figure, perhaps with an inset map like we previously did. But, we can also now plot two choropleth maps over each other, this is made much easier with the biscale() package, however, now we must use ggplot as opposed to tmap, you can force tmap to do it, but the feature is still in development.

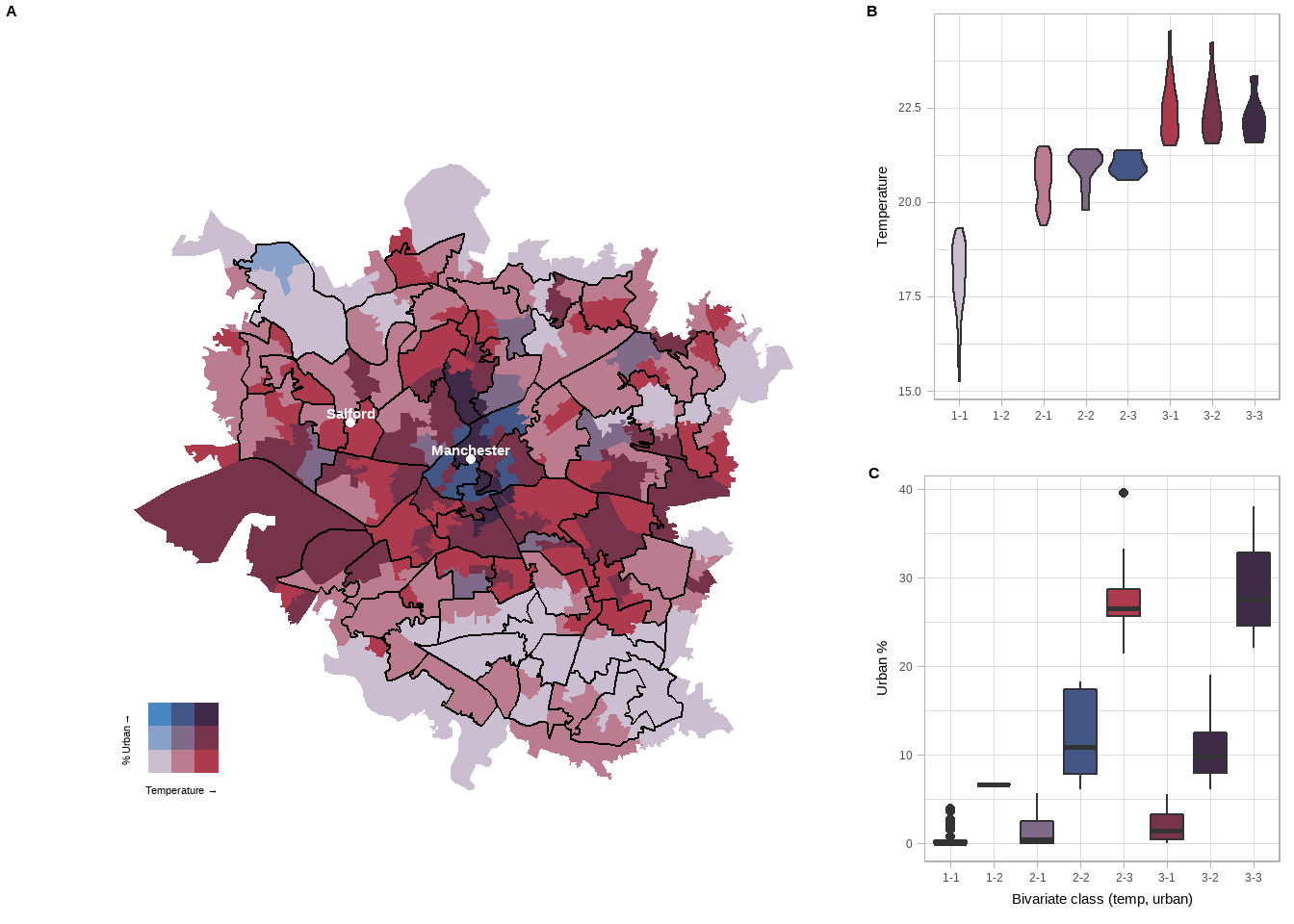

8.16 Bivariate mapping (optional)

Whilst bivaraite maps look cool, they don’t tell us anything about the actual data values, simply dividing the data into classes based on the style parameter which we have seen before - jenks, equal and so on. So here I want to produce:

- A central bivariate map of LSOAs within Manchester

- The central bivariate will have the MSOA boundaries and some place names

- Two plots showing the distribution of the data

library(biscale)

library(cowplot)

library(sysfonts)

library(extrafont)

library(showtext) # more fonts

#font_add_google("Lato", regular.wt = 300, bold.wt = 700) # I like using Lato for data viz (and everything else...). Open sans is also great for web viewing.

showtext_auto()

# create classes

data <- bi_class(manchester_LSOA_temp_urban, x = temp, y = percent_urban, style = "jenks", dim = 3)

#ggplot map

map <- ggplot() +

geom_sf(data = data, mapping = aes(fill = bi_class), color=NA, lwd = 0.1, show.legend = FALSE) +

bi_scale_fill(pal = "DkViolet", dim = 3) +

geom_sf(data = manchester_MSOA, mapping = aes(fill=NA), color="black", alpha=0, show.legend = FALSE)+

geom_sf(data=manchester_Places, mapping=aes(fill=NA), color="white", show.legend = FALSE)+

geom_sf_text(data=manchester_Places, aes(label = name, hjust = 0.5, vjust = -0.5),

nudge_x = 0, nudge_y = 0,

fontface = "bold",

color = "white",

show.legend = FALSE,

inherit.aes = TRUE)+

labs(

title = "",

x="", y=""

) +

bi_theme()

legend <- bi_legend(pal = "DkViolet",

dim = 3,

xlab = "Temperature ",

ylab = "% Urban",

size = 8)

credit<- ("Landsat dervied temperature and urban area, taken 13/5/19")

# combine map with legend

finalPlot <- ggdraw() +

draw_plot(map, 0, 0, 1, 1) +

draw_plot(legend, 0.1, 0.1, 0.2, 0.2)

#draw_text(credit, 0.68, 0.1, 0.2, 0.2, size=10)

finalPlot

That’s the main bivaraite plot, now to the side plots…

urban_box<-ggplot(data, aes(x=bi_class, y=percent_urban, fill=bi_class)) +

geom_boxplot()+

scale_fill_manual(values=c("#CABED0", "#BC7C8F", "#806A8A", "#435786", "#AE3A4E", "#77324C", "#3F2949", "#3F2949"))+

labs(x="Bivariate class (temp, urban)",

y="Urban %")+

theme_light()+

theme(legend.position="none") # Remove legend

temp_violin<-ggplot(data, aes(x=bi_class, y=temp, fill=bi_class))+

geom_violin()+

scale_fill_manual(values=c("#CABED0", "#BC7C8F", "#806A8A", "#435786", "#AE3A4E", "#77324C", "#3F2949", "#3F2949"))+

labs(x="",

y="Temperature")+

guides(fill=guide_legend(title="Class"))+

theme_light()+

theme(legend.position="none") # Remove legendJoin them all together in two steps - make the side plots, then join that to the main plot

side <- plot_grid(temp_violin, urban_box, labels=c("B","C"),label_size = 12, ncol=1)

all <- plot_grid(finalPlot, side, labels = c('A'), label_size = 12, ncol = 2, rel_widths = c(2, 1))Now this looks a bit rubbish on the page. Usually, you might want to export the map to a .png to use elsewhere, but you will need to resize it. I like to plot the map in the console (type all in the console then press enter), click export in the plots tab, reszie the image and note down the numbers then input them below..

all

dev.copy(device = png, filename = here::here("prac8_images", "bivaraite.png"), width = 687, height = 455) ## png

## 3dev.off()## png

## 2

8.16.1 Map notes

- The Manchester outline shapefile doesn’t match up with either the MSOA or LSOA boundaries, this could be solved by making sure the boundary data (e.g. city outline) matches any other spatial units.

- When selecting the data (within the Manchester boundary) any MSOA shape that was within the boundary selected

- Then any LSOA that intersected the MSOA layer was selected

- To resolve this you could clip the MSOA layer to the current displayed layer to force a boundary around the map

- A box plot was used as the data was very spread out in the urban % plot, so a violin plot didn’t show much.

8.17 Considerations

If you wanted to explore this type of analysis further then you would need to consider the following:

- Other methods for extracting temperature from Landsat data

- Other methods for identifying urban area from Landsat data such as image classificaiton

- The formula used to calculate emissivity — there are many

- The use of raw satellite data as opposed to remove the effects of the atmosphere. Within this practical we have only used relative spatial indexes (e.g. NDVI). However, if you were to use alternative methods it might be more appropriate to use surface reflectance data (also provided by USGS).

8.18 References

Thanks to former CASA graduate student Dr Matt Ng for providing the outline to the start of this practical

Avdan, U. and Jovanovska, G., 2016. Algorithm for automated mapping of land surface temperature using LANDSAT 8 satellite data. Journal of Sensors, 2016.

Guha, S., Govil, H., Dey, A. and Gill, N., 2018. Analytical study of land surface temperature with NDVI and NDBI using Landsat 8 OLI and TIRS data in Florence and Naples city, Italy. European Journal of Remote Sensing, 51(1), pp.667-678.

Weng, Q., Lu, D. and Schubring, J., 2004. Estimation of land surface temperature–vegetation abundance relationship for urban heat island studies. Remote sensing of Environment, 89(4), pp.467-483.

Young, N.E., Anderson, R.S., Chignell, S.M., Vorster, A.G., Lawrence, R. and Evangelista, P.H., 2017. A survival guide to Landsat preprocessing. Ecology, 98(4), pp.920-932.

Zha, Y., Gao, J. and Ni, S., 2003. Use of normalized difference built-up index in automatically mapping urban areas from TM imagery. International journal of remote sensing, 24(3), pp.583-594.

8.19 Remote sensing background (optional)

Landsat sensors capture reflected solar energy, convert these data to radiance, then rescale this data into a Digital Number (DN), the latter representing the intensity of the electromagnetic radiation per pixel. The range of possible DN values depends on the sensor radiometric resolution. For example Landsat Thematic Mapper 5 (TM) measures between 0 and 255 (termed 8 bit), whilst Landsat 8 OLI measures between 0 and 65536 (termed 12 bit). These DN values can then be converted into Top of Atmosphere (TOA) radiance and TOA reflectance through available equations and known constants that are preloaded into certain software. The former is how much light the instrument sees in meaningful units whilst the latter removes the effects of the light source. However, TOA reflectance is still influenced by atmospheric effects. These atmospheric effects can be removed through atmospheric correction achievable in software such as ENVI and QGIS to give surface reflectance representing a ratio of the amount of light leaving a target to the amount of light striking it.

We must also consider the spectral resolution of satellite imagery, Landsat 8 OLI has 11 spectral bands and as a result is a multi-spectral sensor. As humans we see in the visible part of the electromagnetic spectrum (red-green-blue) — this would be three bands of satellite imagery — however satellites can take advantage of the rest of the spectrum. Each band of Landsat measures in a certain part of the spectrum to produce a DN value. We can then combine these values to produce ‘colour composites’. So a ‘true’ colour composite is where red, green and blue Landsat bands are displayed (the visible spectrum). Based on the differing DN values obtained, we can pick out the unique signatures (values of all spectral bands) of each land cover type, termed spectral signature.

For more information read Young et al. (2017) A survival guide to Landsat preprocessing

8.20 Feedback

Was anything that we explained unclear this week or was something really clear…let us know using the feedback form. It’s anonymous and we’ll use the responses to clear any issues up in the future / adapt the material.